Research Projects

Container Workflows for HPC

Containerisation shows enormous potential for both simulation and analysis workflows. This project encompasses a range of mini-app tests of how well containers suit existing HPC workflows, as well as the full integration of significant codes within containers.

Metadata Systems for Environmental Science

Standards, Provenance and Interfaces

Managing large-scale data systems requires metadata systems to match.

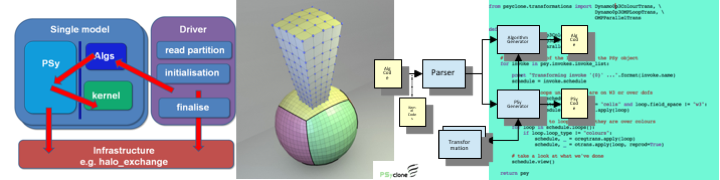

LFRic and PSyclone

Domain Specific Language and Compiler for Weather and Climate.

Splitting science code from the nitty-gritty details of exploiting parallelism on specific computer architectures, using DSLs for weather and climate: PSyclone and the new LFRic Weather and Climate Model.

JASMIN

Research for Big Data Systems

Managing and analysing petascale data requires dedicated computing systems with a specialised software environment. We have provided leadership in the development of JASMIN as well as investigating real scientific workflows, developing infrastructure software, and understanding how best to deploy the necessary hardware and software infrastructure.

ExCALIDATA

Workflow and Data systems for the exascale era

Two projects addressing a) optimal use of storage by developing new storage interfaces which hide storage complexity, and b) minimising data movement within simulations and in analysis workflows.

Data Reduction

Techniques for intelligently reducing data volume

Volumes of data being generated by simulations and next generation observation platforms are huge, but not all the data being produced needs to be stored as is. We are investigating techniques to identify data that does not need to be stored (and conversely identify data that is important), and to compress data when it does need to be stored.

ESiWACE

The European Centre of Excellence in Weather and Climate Computing

ACES involvement in ESiWACE includes developing new I/O sub-systems capable of handling pre-exascale data flows, both at simulation time and in the analysis environment.